NESS objectives

NESS is sound spatialization software. It places virtual audio objects in a given venue and recreates a coherent soundstage over a wide listening area (with no sweet spot) on a loudspeaker system. NESS can spatialize up to 16 sound sources on 4 groups of speakers, for a total of 32 outputs. It also integrates a reverberation engine to synthesize coherent acoustic spaces, and trajectory widgets that make source movements easy to manage.

Perceived location of a sound source on a loudspeaker setup

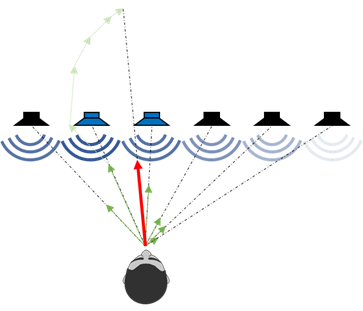

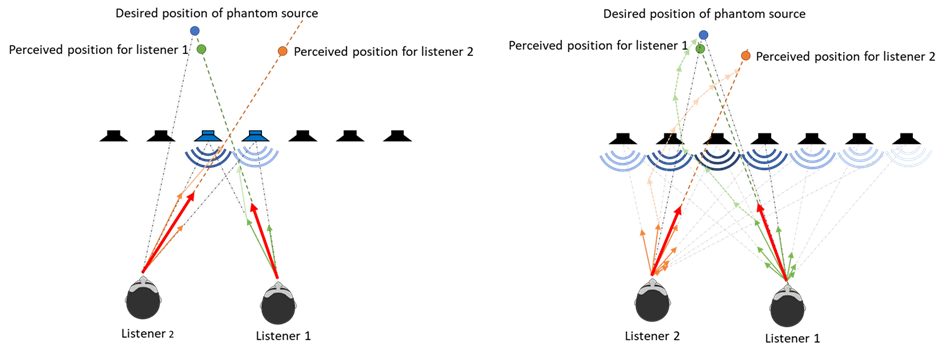

When a signal reaches our ears from multiple directions with arrival-time differences under 30 ms, the brain perceives it as coming from a single source. The perceived direction of this fused source depends on the amplitude and delay with which each loudspeaker's signal reaches the listener. The sound source is generally perceived either in the direction of the first incoming signal, that is, the closest speaker (precedence effect), or in the direction of the speakers with the highest intensity (energy vector model). Psychoacoustic models can analyze the contribution of every speaker to predict the perceived direction of the sound source [1].

In this figure, the loudspeakers that contribute most (the closest or the loudest) are colored in blue. The contribution of each speaker is represented by the length of the vector pointing in its direction (energy vector). Summing all vectors models the perceived direction (in red).
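The energy vector summation can be sketched as follows. This is a minimal illustration of Gerzon's energy vector, assuming one amplitude gain per speaker and speaker azimuths measured from a single listening position (positive angles to the left). It ignores arrival-time differences, so it does not model the precedence effect; the function name is hypothetical.

```python
import math

def energy_vector(gains, azimuths_deg):
    """Return (direction_deg, norm) of the energy vector.

    Each speaker contributes a unit vector pointing toward its azimuth,
    weighted by its energy g**2; the sum is normalized by the total energy.
    """
    total = sum(g * g for g in gains)
    ex = sum(g * g * math.cos(math.radians(az)) for g, az in zip(gains, azimuths_deg)) / total
    ey = sum(g * g * math.sin(math.radians(az)) for g, az in zip(gains, azimuths_deg)) / total
    return math.degrees(math.atan2(ey, ex)), math.hypot(ex, ey)

# Two speakers at +/-30 degrees, the left one about 6 dB louder (gain 1.0 vs 0.5)
direction, norm = energy_vector([1.0, 0.5], [30.0, -30.0])
print(f"predicted direction {direction:.1f} deg, vector norm {norm:.2f}")
```

The predicted direction is pulled toward the louder speaker without reaching it. A norm below 1 is commonly read as a less sharply localized, wider image.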

The algorithms used in NESS are chosen to minimize the localization error of sound sources over a large audience area.

Existing sound spatialization methods

Many sound spatialization methods exist. Here is a comparison of the most widely used ones:

VBAP

Principle

The VBAP method (vector based amplitude panning) generalizes the amplitude panning used to mix stereo signals to arbitrary loudspeaker arrangements. Only the closest speakers on each side of the source direction play the source signal. The gain of each speaker is computed from the listening position, the speaker positions and the virtual source position, using the principle of energy vector summation.[2]
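For a horizontal (2D) setup, this pairwise panning can be sketched as follows, after Pulkki [2]. The sketch assumes speaker azimuths are measured from the listening position; the function name and the tolerances are choices of this example, not taken from any particular implementation.

```python
import math

def vbap_2d(source_az, speaker_az):
    """Pairwise 2D VBAP gains.

    source_az: source azimuth in degrees, seen from the listening position.
    speaker_az: list of loudspeaker azimuths in degrees (same reference).
    Returns one gain per speaker; only the pair enclosing the source is non-zero.
    """
    def unit(deg):
        rad = math.radians(deg)
        return math.cos(rad), math.sin(rad)

    px, py = unit(source_az)
    # Sort speakers by azimuth so that neighbours form adjacent pairs
    order = sorted(range(len(speaker_az)), key=lambda i: speaker_az[i] % 360.0)
    gains = [0.0] * len(speaker_az)
    for n, i in enumerate(order):
        j = order[(n + 1) % len(order)]
        (l1x, l1y), (l2x, l2y) = unit(speaker_az[i]), unit(speaker_az[j])
        det = l1x * l2y - l2x * l1y
        if abs(det) < 1e-12:
            continue  # collinear pair: the 2x2 system cannot be inverted
        # Solve p = g1 * l1 + g2 * l2 with Cramer's rule
        g1 = (px * l2y - l2x * py) / det
        g2 = (l1x * py - px * l1y) / det
        if g1 >= -1e-9 and g2 >= -1e-9:  # source lies between the two speakers
            g1, g2 = max(g1, 0.0), max(g2, 0.0)
            norm = math.hypot(g1, g2)      # constant-power normalization
            gains[i], gains[j] = g1 / norm, g2 / norm
            return gains
    raise ValueError("source direction is not enclosed by any speaker pair")

# Quadraphonic layout: a source straight ahead is shared by the two front speakers
print(vbap_2d(0.0, [-45.0, 45.0, 135.0, -135.0]))
```

Because the gains are derived from directions seen from one listening position, they are only correct at that position, which is the sweet spot listed below.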

Advantages

- Very good rendering of the angular location at the listening position

- Low complexity of the algorithm

Drawbacks

- The speakers must be equidistant from the listener and are often placed in standardized layouts, which can be hard to reproduce given the installation constraints.

- Each speaker must cover the whole listening area; otherwise some sources are not audible from some listening positions.

- Presence of a sweet spot: the spatialization is only valid at the listening position used to compute the panning gains.

DBAP

Principle

The DBAP method (distance based amplitude panning) spatializes a virtual sound source by computing the acoustic attenuation due to sound propagation between the source and each loudspeaker. The listener position is not taken into account, and all speakers play the signal.[3]
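The DBAP gain computation can be sketched as follows, after the formulation in Lossius [3]: each gain falls off as a power of the source-to-speaker distance, and a normalization factor keeps the total power constant. The `rolloff_db` and `blur` arguments mirror the rolloff and spatial-blur terms of that paper; the function name, the 2D coordinates in metres, the omission of per-speaker weights and the default values are assumptions of this sketch.

```python
import math

def dbap_gains(source, speakers, rolloff_db=6.0, blur=0.1):
    """DBAP gains for one virtual source.

    source: (x, y) position of the virtual source, in metres.
    speakers: list of (x, y) loudspeaker positions, in metres.
    rolloff_db: level drop in dB per doubling of distance.
    blur: spatial blur r_s in metres, added to every distance so that the
          gains stay finite when the source sits on top of a speaker
          (blur=0 with the source exactly on a speaker divides by zero).
    """
    a = rolloff_db / (20.0 * math.log10(2.0))  # distance-law exponent
    dists = [math.sqrt((source[0] - x) ** 2 + (source[1] - y) ** 2 + blur ** 2)
             for x, y in speakers]
    # k makes the total power sum(g_i**2) equal to 1 wherever the source is
    k = 1.0 / math.sqrt(sum(d ** (-2.0 * a) for d in dists))
    return [k / d ** a for d in dists]

speakers = [(-2.0, 0.0), (0.0, 0.0), (2.0, 0.0)]
print([round(g, 3) for g in dbap_gains((0.5, 1.0), speakers)])
```

Note that no listener position appears anywhere: every speaker receives a non-zero gain, and the closest ones dominate.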

Advantages

- No sweet spot: the spatialization holds over a larger audience area.

- Can be adapted to irregular loudspeaker topologies.

- The source signal is audible on all loudspeakers.

Drawbacks

- Spatialization performance is limited for listening positions close to a loudspeaker, because of the precedence effect.

- Computing distances and acoustic propagation is more CPU-intensive.

Comparison of localization error for VBAP (left) and DBAP (right)

WFS

Principle

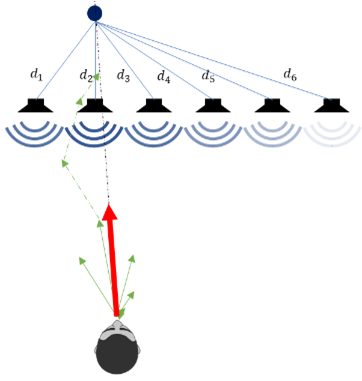

The WFS method (wave field synthesis) is based on the Huygens-Fresnel principle, which states that any wavefront can be decomposed into a superposition of elementary waves. A large number of speakers can therefore act as elementary sources that together recreate an acoustic wavefront. A virtual sound source is spatialized by recreating the wavefront that a real source at the same position would have produced. For virtual sources located behind the speakers, this is done by computing the delays and attenuations due to acoustic propagation between the virtual source and each loudspeaker. The calculations are similar to those of the DBAP method, with delays added. The WFS principle can also be extended to recreate wavefronts for sources located in front of the speakers, inside the listening area. [4]
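The per-speaker delays and attenuations for a virtual source behind the speakers can be sketched as follows. This is a deliberately simplified illustration that assumes a speed of sound of 343 m/s and a plain 1/d spreading law; a complete WFS driving function also includes a frequency-dependent pre-filter and geometry-dependent weights, which are left out here. The names are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at about 20 degrees C

def delays_and_gains(source, speakers, ref_distance=1.0):
    """Per-speaker (delay in s, amplitude) for a virtual source behind the speakers.

    Simplification: propagation delay d / c and a 1/d spreading law only,
    with the gain clamped to 1 inside ref_distance.
    """
    result = []
    for speaker in speakers:
        d = math.dist(source, speaker)
        result.append((d / SPEED_OF_SOUND, ref_distance / max(d, ref_distance)))
    return result

# Source 4 m behind the middle of a three-speaker line along y = 0
for delay, gain in delays_and_gains((0.0, -4.0), [(-1.0, 0.0), (0.0, 0.0), (1.0, 0.0)]):
    print(f"delay {delay * 1000:.2f} ms, gain {gain:.3f}")
```

The middle speaker, closest to the virtual source, fires first and loudest; the outer speakers follow slightly later and quieter, which curves the emitted wavefront as if it came from the virtual source.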

Advantages

- The sound spatialization is correct for the whole listening area.

- The sound field is accurately reproduced.

- Sources can be reproduced inside the listening area.

- The precedence effect is taken into account in the algorithm.

Drawbacks

- A large number of closely spaced loudspeakers must be used.

- Heavy computational load due to the high number of channels

- Very expensive and not suitable for touring setups

- Spatial aliasing can occur at high frequencies, depending on the spacing of the loudspeakers.

NESS

Used principles

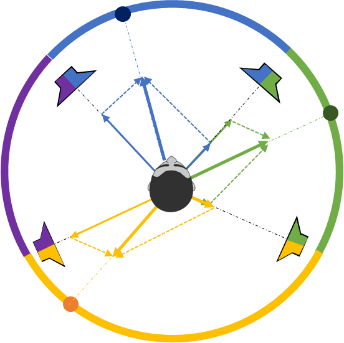

The spatialization algorithms of NESS use the principles of WFS. The gain of each speaker is computed with the DBAP method [3], and delays are added to the signal as in the WFS algorithm. Adding delays reinforces the spatialization and the focus of sound sources by modifying the arrival times of all speaker signals, which creates the illusion of a precedence effect in the direction of the sound source for the whole listening area. Extended energy vector perception models show a significant reduction of the localization error and of the perceived width of the source [1], [5]. The DBAP method also allows the use of irregular loudspeaker setups.
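The effect of these delays on the precedence effect can be illustrated with a small geometric sketch. For one listener, it finds which speaker's signal arrives first, with and without delaying each speaker by its distance to the virtual source divided by the speed of sound. The geometry, the 343 m/s speed of sound and the function name are assumptions of this example. A real system would also subtract a common offset from all delays to limit latency, which does not change the arrival order.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at about 20 degrees C

def first_arrival(source, speakers, listener, use_delays=True):
    """Index of the speaker whose signal reaches the listener first.

    Arrival time = optional delay (distance source -> speaker) / c
                   + travel time (distance speaker -> listener) / c.
    """
    def arrival(speaker):
        t = math.dist(speaker, listener) / SPEED_OF_SOUND
        if use_delays:
            t += math.dist(source, speaker) / SPEED_OF_SOUND
        return t
    return min(range(len(speakers)), key=lambda i: arrival(speakers[i]))

speakers = [(-4.0, 0.0), (-2.0, 0.0), (0.0, 0.0), (2.0, 0.0), (4.0, 0.0)]
source = (-4.0, -1.0)   # virtual source behind the left end of the line
listener = (4.0, 6.0)   # listener on the right side of the audience
print(first_arrival(source, speakers, listener, use_delays=False))  # 4: nearest speaker, far right
print(first_arrival(source, speakers, listener, use_delays=True))   # 1: speaker on the source side
```

Without delays, this off-axis listener hears the nearest speaker first and localizes the source on the wrong side. With delays, the first wavefront comes from the speaker closest to the straight line between the virtual source and the listener, which holds wherever the listener stands.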

Comparison of perceived directions of arrival and width of a phantom source with NESS algorithm, with and without the use of delays

Speaker setup adaptation

The “blur” and “rolloff” parameters of the DBAP algorithm [3] ensure that sound sources are rendered without artifacts caused by their position relative to the speakers.

The “blur” parameter smooths gain variations when sources are close to the loudspeakers, or when the speakers are widely spaced, by adding a tunable offset to the distance computation.
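In the DBAP formulation of [3], this offset is a spatial-blur term $r_s$ added under the square root of the source-to-speaker distance. Assuming the NESS “blur” parameter plays the same role, the distance from the source $(x_s, y_s)$ to speaker $i$ at $(x_i, y_i)$ becomes:

$$
d_i = \sqrt{(x_s - x_i)^2 + (y_s - y_i)^2 + r_s^2} \;\geq\; r_s
$$

Because DBAP gains decrease as $d_i$ grows, and $d_i$ can never drop below $r_s$, a speaker's gain stays bounded even when the source passes exactly over it. For example, with $r_s = 0.5$ m, a source on top of a speaker is treated as if it were 0.5 m away, instead of at zero distance where the gain would diverge.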

Comparison of the perception of a moving source with different values of blur. With a higher blur value, a movement close to the speakers is smoother.

The “rolloff” parameter intensifies or softens the panning effect by tuning the acoustic distance attenuation law. A higher rolloff increases the gain differences between speakers on small setups.
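In [3], the distance law is a power law whose exponent is derived from the rolloff $R$, expressed in dB per doubling of distance. Assuming the NESS rolloff parameter maps onto $R$, the gain $g_i$ of speaker $i$ at distance $d_i$ from the source is:

$$
g_i = \frac{k}{d_i^{\,a}}, \qquad a = \frac{R}{20\log_{10} 2}, \qquad k = \Big(\sum_j d_j^{-2a}\Big)^{-1/2}
$$

The factor $k$ keeps $\sum_i g_i^2 = 1$. Doubling the distance lowers the gain by $20\log_{10} 2^{a} = R$ dB. With $R = 6$ dB ($a \approx 1$, an inverse-distance law), a speaker twice as far from the source as another is 6 dB quieter; with $R = 12$ dB ($a \approx 2$) the gap grows to 12 dB, which sharpens the panning on a small setup.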

Comparison of spatialization gains (in yellow) with different values of rolloff (called “general focus” in NESS). A higher value gives more point-like sources but can increase the perceived direction error on large stages.

NESS also includes functions derived from the perception model to adjust the perceived width of sound sources and the way perceived intensity changes with distance.

Reverberation Engine

The NESS reverberation engine is based on convolution and a distance law. The distance law lets you customize the amount of reverberation applied to distant sources and computes the pre-delays automatically. It is shared by all sources to ensure a coherent acoustic space. Depending on the spatialization setup, three modes are available: surround for 360° setups, frontal for theaters or live concerts, and 180° for hemispheric artistic installations.

NESS comes with its own set of impulse responses (IRs), and you can easily add your own IR files.
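A convolution reverb with a distance-dependent dry/wet balance can be sketched as follows. The distance law here is an assumption for illustration only: the direct sound falls as 1/d while the reverb level stays constant, as in a diffuse sound field, so distant sources sound more reverberant. The pre-delay is passed in as an argument rather than computed, because the law NESS uses to derive it is not described here. All names are hypothetical.

```python
import numpy as np

def render_with_reverb(dry, ir, distance, pre_delay_s, fs=48000,
                       ref_distance=1.0, wet_level=0.3):
    """Mix the direct sound of a source with its convolution reverb.

    dry: mono source signal; ir: mono impulse response at sample rate fs.
    distance: source distance in m; pre_delay_s: delay of the reverb in s.
    """
    dry = np.asarray(dry, dtype=float)
    # Assumed law: direct sound attenuated as 1/d beyond ref_distance
    direct_gain = min(1.0, ref_distance / max(distance, 1e-9))
    # Convolution reverb at a constant level, shifted by the pre-delay
    wet = wet_level * np.convolve(dry, np.asarray(ir, dtype=float))
    n_pre = int(round(pre_delay_s * fs))
    out = np.zeros(max(len(dry), n_pre + len(wet)))
    out[:len(dry)] += direct_gain * dry
    out[n_pre:n_pre + len(wet)] += wet
    return out

impulse = np.array([1.0, 0.0, 0.0, 0.0])
toy_ir = np.array([0.5, 0.25])  # toy IR, not a real room response
print(render_with_reverb(impulse, toy_ir, distance=2.0, pre_delay_s=2 / 48000))
```

Sharing one IR and one distance law across all sources, as the engine does, keeps every source in the same virtual room; only the dry/wet balance and the pre-delay change from one source to another.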

Movements management

A trajectory editor lets you edit the movement of each source. The trajectory controls both the position and the speed of the audio object: each segment is travelled in the same amount of time, so the speed decreases where consecutive points are close together and increases where they are far apart.[6]
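The equal-time rule can be sketched as follows, assuming straight-line interpolation between consecutive points; the curve actually used in NESS and in [6] may differ, and the function name is hypothetical.

```python
def position_at(points, duration, t):
    """Position at time t on a trajectory whose segments all last the same time.

    points: list of (x, y) control points; duration: total travel time in s.
    """
    n_segments = len(points) - 1
    if n_segments < 1:
        return points[0]
    seg_time = duration / n_segments
    t = min(max(t, 0.0), duration)                # clamp to the trajectory
    k = min(int(t // seg_time), n_segments - 1)   # index of the current segment
    u = (t - k * seg_time) / seg_time             # progress within the segment, 0..1
    (x0, y0), (x1, y1) = points[k], points[k + 1]
    return (x0 + u * (x1 - x0), y0 + u * (y1 - y0))

# Two segments of 1 m and 4 m, 1 s each: the source moves at 1 m/s, then 4 m/s
pts = [(0.0, 0.0), (1.0, 0.0), (5.0, 0.0)]
print(position_at(pts, 2.0, 0.5))  # (0.5, 0.0)
print(position_at(pts, 2.0, 1.5))  # (3.0, 0.0)
```

Speed is therefore edited simply by adding or moving points: clustering them slows the source down, spreading them out speeds it up.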

When a source moves, the delays computed for each speaker vary, which can create a Doppler effect. Interpolated delay lines, combined with smoothing of the delays by a second-order filter, prevent clicks and other artifacts and significantly reduce the Doppler frequency shift. The smoothing time constant can be tuned in the spatialization settings.
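Both mechanisms can be sketched together as follows. This is a minimal illustration under stated assumptions: the second-order smoother is built from two identical one-pole low-pass filters in series (a critically damped response), and the delay line is read with linear interpolation. The actual filter design and interpolation method of NESS are not specified here, and the class and parameter names are hypothetical.

```python
import math

class SmoothedDelayLine:
    """Fractional delay line whose delay time is smoothed by a 2nd-order filter."""

    def __init__(self, max_delay, time_constant_s, fs=48000):
        self.buf = [0.0] * (max_delay + 2)  # max_delay in samples
        self.write = 0
        self.coef = math.exp(-1.0 / (time_constant_s * fs))  # one-pole coefficient
        self.stage1 = 0.0
        self.stage2 = 0.0  # smoothed delay actually used, in samples
        self.target = 0.0

    def set_delay(self, delay_samples):
        """New target delay, e.g. recomputed when the source moves."""
        self.target = float(delay_samples)

    def process(self, x):
        n = len(self.buf)
        self.buf[self.write] = x
        # Second-order smoothing: two one-pole low-pass filters in cascade
        self.stage1 = self.coef * self.stage1 + (1.0 - self.coef) * self.target
        self.stage2 = self.coef * self.stage2 + (1.0 - self.coef) * self.stage1
        delay = min(max(self.stage2, 0.0), n - 2)
        # Linear interpolation between the two samples around the read position
        read = self.write - delay
        i = math.floor(read)
        frac = read - i
        y = (1.0 - frac) * self.buf[i % n] + frac * self.buf[(i + 1) % n]
        self.write = (self.write + 1) % n
        return y

line = SmoothedDelayLine(max_delay=4800, time_constant_s=0.05)
line.set_delay(480)  # 10 ms at 48 kHz, reached gradually rather than instantly
out = [line.process(1.0 if k == 0 else 0.0) for k in range(2000)]
```

Because the smoothed delay glides toward each new target instead of jumping, the read position never skips samples, which avoids clicks. A longer time constant spreads each delay change over more time, lowering the rate of change of the delay and hence the resulting pitch shift.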

Bibliography

[2] V. Pulkki, « Spatial sound generation and perception by amplitude panning techniques », Helsinki University of Technology, Espoo, 2001.

[3] T. Lossius, « DBAP – Distance-Based Amplitude Panning », p. 5.

[4] A. J. Berkhout, « A Holographic Approach to Acoustic Control », J. Audio Eng. Soc., vol. 36, no. 12, p. 977‑995, 1988.

[5] J. C. Middlebrooks, « Sound localization », in Handbook of Clinical Neurology, vol. 129, Elsevier, 2015, p. 99‑116.

[6] D. Jacquet, « Procédé de spatialisation sonore », French patent FR3113993A1, 9 September 2020. https://patentimages.storage.googleapis.com/f0/38/d4/019cc21ff880d0/FR3113993A1.pdf